Menu

Statistical correlation is the phenomena evaluated by a correlation coefficient. Two random variables or bivariate data are said to be correlated if there is a quantifiable link between them, such as a statistical relationship. Plotting the change in average daily temperature and ice cream consumption, or the intensity of cloud coverage and rainfall precipitation in a specific region, is a simple example. We’ll see that the two variables tend to fluctuate in lockstep to some extent, implying some degree of interdependence. The reliance could be due to direct or indirect causality, or it could be completely fictitious.

A correlation coefficient derived for two variables, X and Y, is a measure of how much the dependent variable (Y) changes when the independent variable (X) changes (X). It measures the relationship’s strength as well as its direction. A positive correlation value indicates a direct association between the variables, whereas a negative correlation coefficient indicates an inverse relationship (when X is higher, Y is lower, and vice versa). A coefficient of zero indicates that there is no statistical link (orthogonality), whereas a value of one (or negative one) indicates that there is a perfect correlation (X and Y change in unison).

We can therefore distinguish between three basic types of correlation:

Below is an example of a negative correlation, along with the Pearson’s correlation coefficient (R).

Different sorts of coefficients quantify different types of correlations in terms of how the variables relate to one another, such as linear vs. non-linear, functional vs. non-functional, and so on (see Pearson’s vs. Spearman’s vs Kendall’s coefficient below). A coefficient of correlation, like any other statistic, is essentially an estimate with inherent uncertainty. Any correlation coefficient’s uncertainty can be quantified using a Z score, p-value, and confidence intervals. The three most common coefficients are supported by our correlation coefficient calculator, as well as uncertainty estimates for all of them.

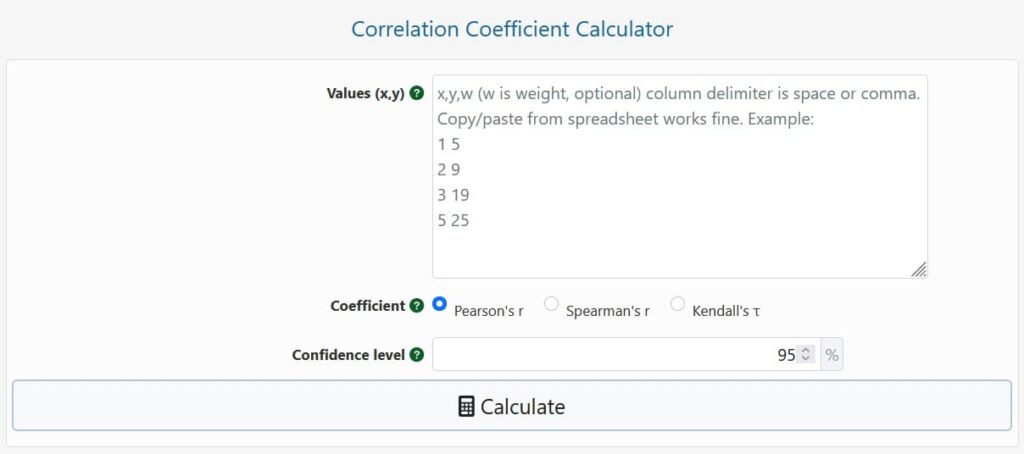

To use this correlation coefficient calculator, please enter your data: one column per variable, X and Y. Pair weights can be entered in a third column and will be applied to the values, resulting in a weighted correlation coefficient (only applies to Pearson’s coefficient). Because columns are separated by spaces, tabs, or commas, copying and pasting from Excel or another spreadsheet should be straightforward. There should be an equal number of values in each column.

Then you need to select the type of coefficient to compute. Coefficients supported in the calculator are:

The coefficient to choose will be determined by the sort of data you have and the type of connection that is assumed to underpin the ostensible dependence. This phase is critical for correctly determining whether or not there is a link and how strong it is. If you need help, the comparison of the three coefficients of correlation supported by this calculator may be seen below and should be of great help.

Finally, you can alter the generated confidence intervals’ default 95 percent confidence level. The Fisher transformation is used to calculate the p-values and confidence ranges for the Pearson and Spearman coefficients, which are held under the independence of observations assumption. Estimates of the Kendall rank correlation coefficient are subject to the same assumption.

The selected coefficient and sample size will be output by the coefficient correlation calculator. For all but the weighted Pearson’s coefficient, it will also produce the z score, p-value, and confidence intervals (two-sided bounds and one-sided bounds). The least-squares regression equation (regression line) of the form y = m* x + b is also included in the output, where m is the slope and b is the y-intercept of the regression line.

In order to make accurate judgments, the correct correlation coefficient must be chosen. When the assumptions of a statistical model are broken, the numbers become meaningless. Using the improper coefficient, such as Pearon’s coefficient while the relationship is nonlinear, can result in failure to capture a true correlation. “The improper choice can hide meaningful findings due to poor power or lead to misleading relationships due to an increased type I error rate,” Arndt et al. wrote. To assist you in making this decision, we’ve put up a table with the most important attributes and assumptions for the three most commonly used coefficients, as well as recommendations for when to use which.

Because it is frequently misunderstood that Pearson’s r requires that both X and Y be normally distributed, it is worth emphasising that this is not the case. “…the method of “product moments” is acceptable, whether or not the distribution follows the normal rule of frequency, as long as the regression is linear,” as Spearman points out. As a result, neither coefficient’s validity is based on distributional assumptions.

Normality is solely assumed for the sake of calculating relevant statistics, and if you’re interested in checking for deviations, you can use our normality test calculator. Remember that high p-values from normality tests could simply be due to a small sample size and tests that aren’t sensitive enough.

As you can see, choosing the appropriate decision isn’t easy because it necessitates knowing your data and comprehending the potential for dependency. Make sure you’re aware of the consequences of choosing one strategy over the other.

Several distinct coefficients are supported by the correlation coefficient calculator. The formulae that were utilised to calculate each of them are detailed here.

Pearson’s correlation coefficient formula

Pearson’s (population product-moment correlation coefficient, rho) is calculated using the following formula [1]:

where cov(X,Y) is the covariance of the variables X and Y, X (sigma X) is the population standard deviation of X, and Y is the population standard deviation of Y, and X (sigma X) is the population standard deviation of X. It is defined mathematically as the fit quality of least squares to the original data. It’s useful when we know the population mean and standard deviations, which isn’t always the case. As a result, the most commonly used formula is the Pearson sample correlation coefficient r equation.

The formula for Pearson’s r is:

Instead of population means and standard deviations, we use sample means and standard deviations, which is effectively the same as Pearson’s. The sample covariance cov(x,y) is represented in the numerator, while the sample standard deviations x and y are represented in the denominator. The summation operator is represented by the big operator. It’s easy to understand how correlation can be described as a standardised form of covariance using this equation.

Spearman rank correlation formula

Spearman’s rs (Spearman’s rank correlation coefficient) can be calculated using the following formula [2]:

The rank converted values of X and Y are denoted by rgX and rgY, respectively. As a result, Spearman’s correlation coefficient rs is simply the Pearson correlation coefficient computed using the rank values rather than the raw values of the two variables, which is why it can detect both non-linear and linear relationships between X and Y, as long as Y is a monotone function of X. In other words, the Spearman rs evaluates how well an arbitrary monotonic function can characterise a connection between two variables without making any assumptions about the variables’ frequency distribution [4].

Kendall’s tau formula

The Kendall rank correlation coefficient (tau), sometimes known as Kendall’s coefficient or just Kendall’s, is calculated using the following formula [3]:

The number of pairs is n, and the usual sign function is sgn(). When there are no ties in the data, the coefficient derived with the above equation is known as (A). The calculator employs a slightly modified equation (B) that correctly accounts for links across datasets [6].

Kendall’s tau measures the similarity of ranked transformed data orderings and can be translated as the chance that when X increases, Y increases rescaled from -1 to 1. The ease of interpretation and other desirable qualities – high power with good robustness, along with an intuitive interpretation as the probability that every pair of observations would have the same ordering on both variables rescaled from -1 to 1 [5] – make it a prime candidate f

The weighted Pearson correlation coefficient is calculated as follows:

The weighted covariance of x and y is divided by the product of the weighted standard deviations of x and y. Given a vector of weights w, the weighted covariance of x and y may be calculated as follows:

where mx and my are the weighted means of x and y calculated as before.

Using the same notation, the weighted standard deviation formula is:

It is calculated in the same way for y.

© 2021 All rights reserved

Ask Your Query